About Me

Robotics Engineer

I'm a PhD candidate in Robotics at Rensselaer Polytechnic Institute, advised by Dr. John Wen.

My research focuses on motion optimization and kinematic calibration of robot arms for manufacturing applications, drawing on concepts from robotics, optimization, and control. I also have experience with semi-autonomous vehicles for search and exploration, using SLAM, deep learning, and reinforcement learning.

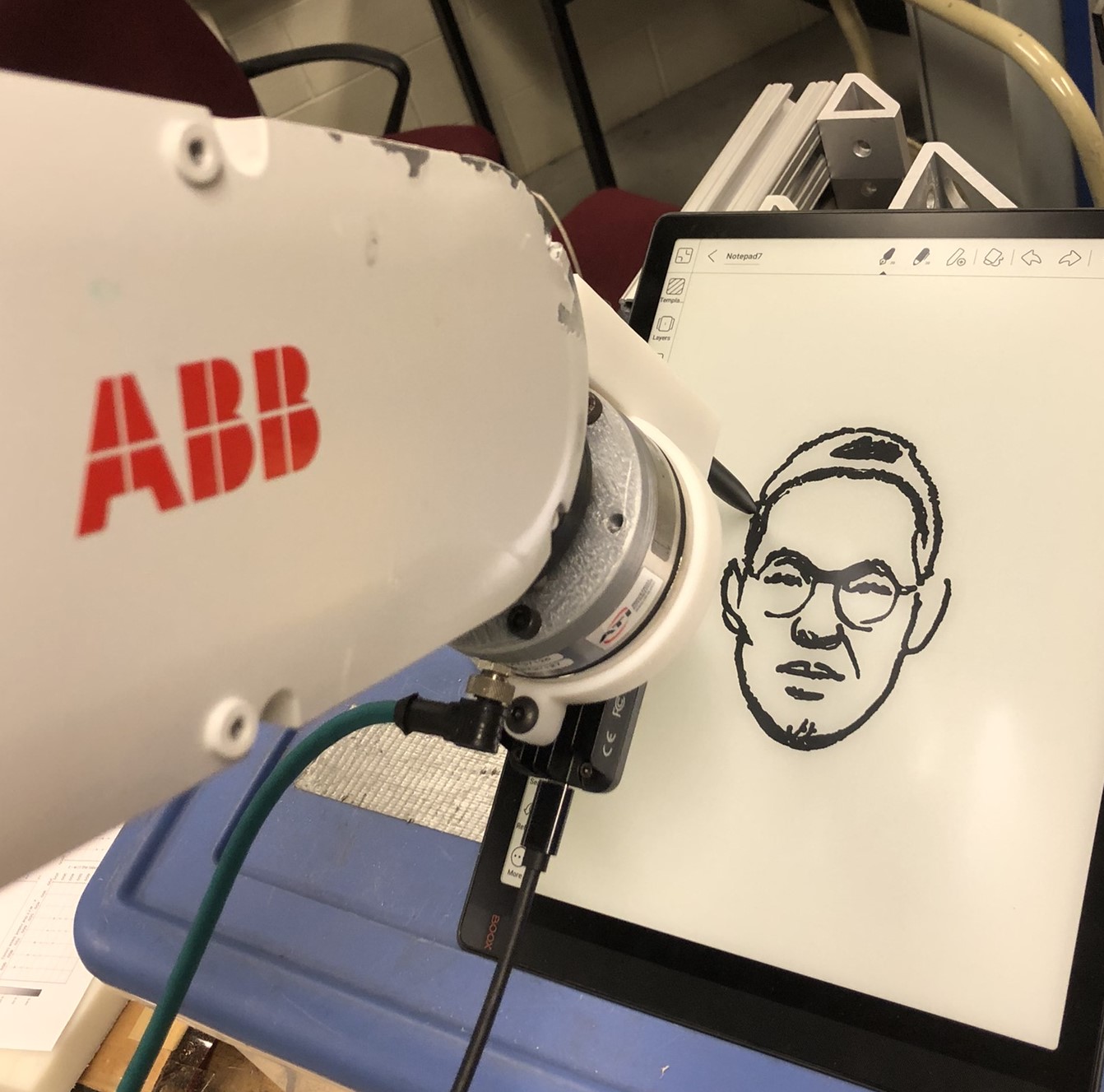

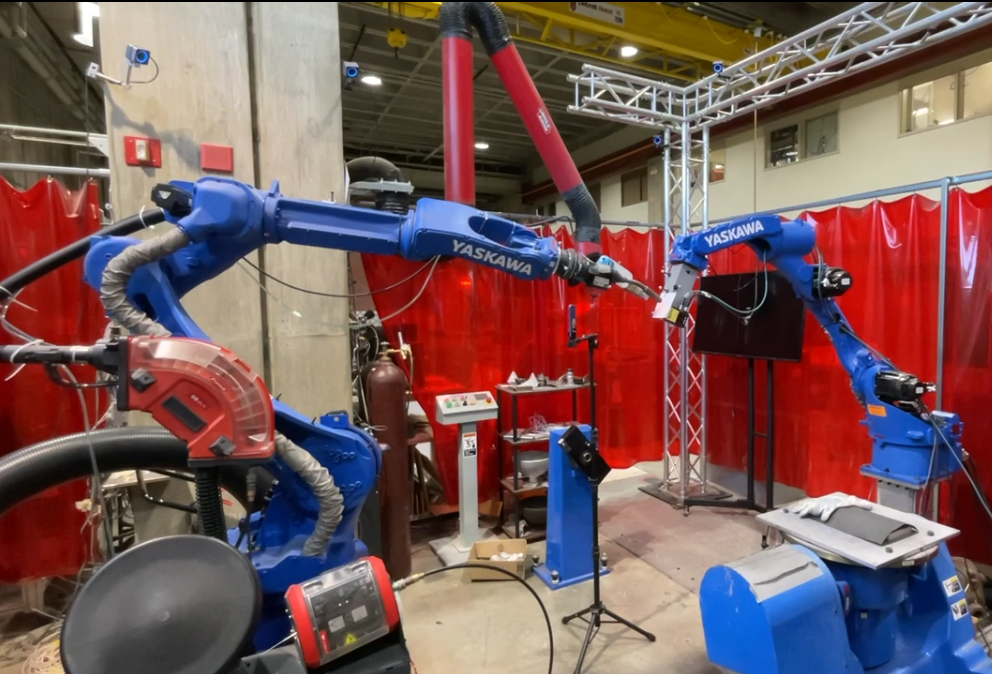

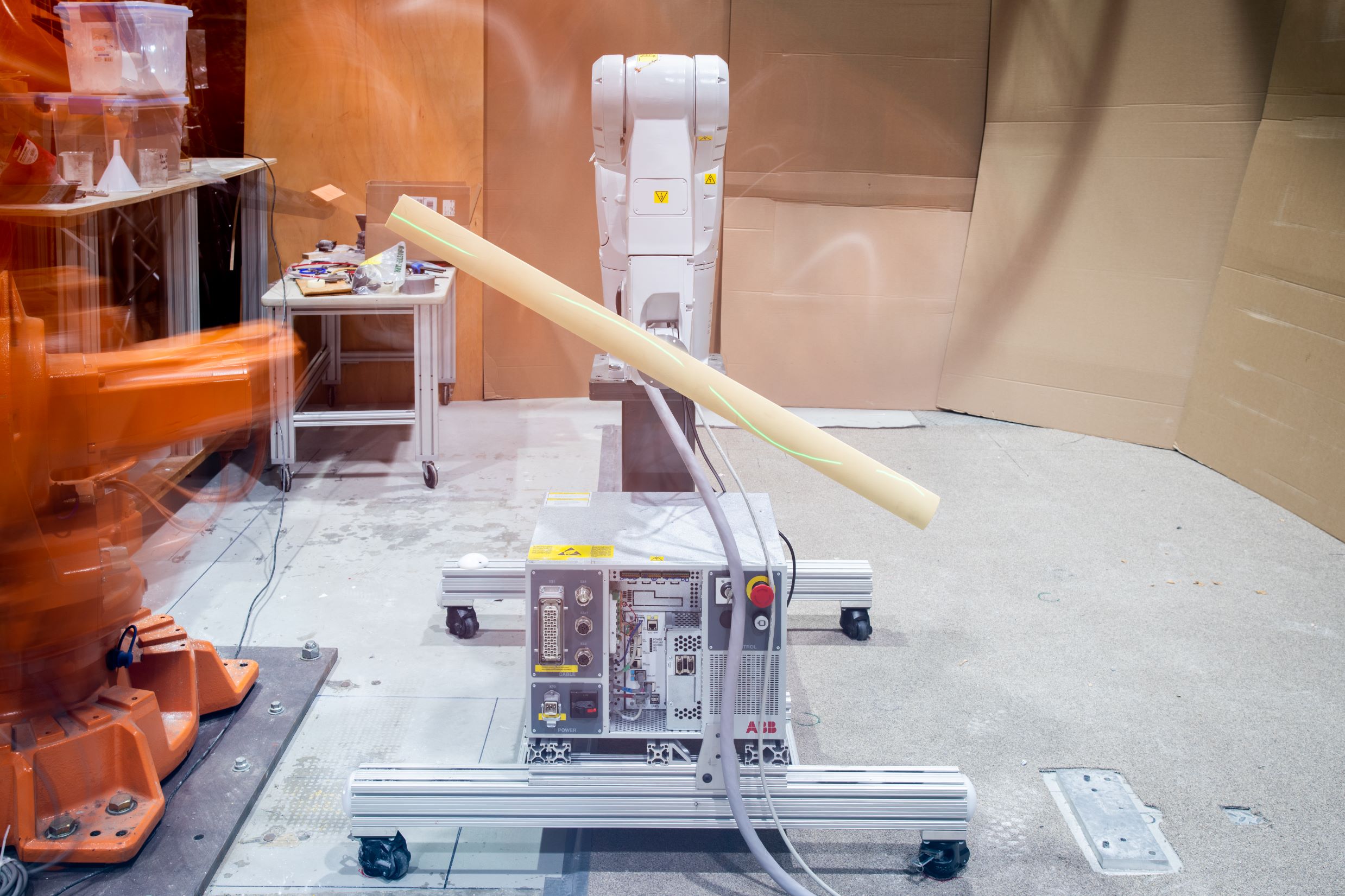

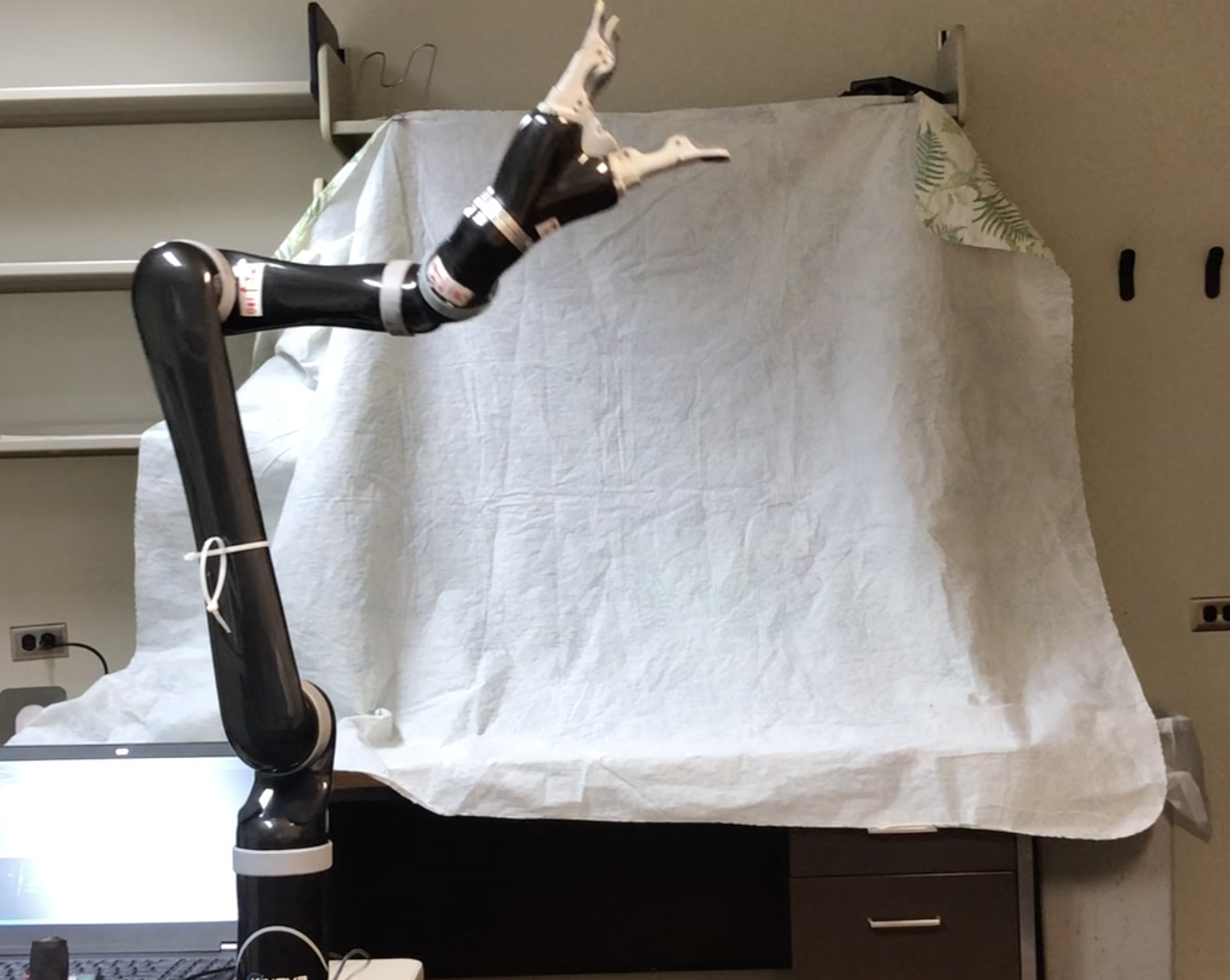

Robotics Manufacturing

Kinematic Calibration

Data-driven WAAM

Research Projects

Contact Me

luc5@rpi.edu

Location

Rensselaer Polytechnic Institute, Troy, NY

Availability

I check my email daily and respond promptly to inquiries.